Data Modeling pt.4 / Database Normalization pt. 1

Digestible Data Modeling Blogging

Data Modeling is an important skill for any data worker to understand. Data Engineers in particular should understand this topic and be able to apply it to their work.

This post is a continuation of a data or dimensional modeling series. This series will have 5 parts. For pt.4 , we will go over the start of a Wikipedia page on Database normalization.

This Data Modeling Series pt.4 == Database Normalization Series pt.1

Data Modeling pt.4: Dimension Normalization

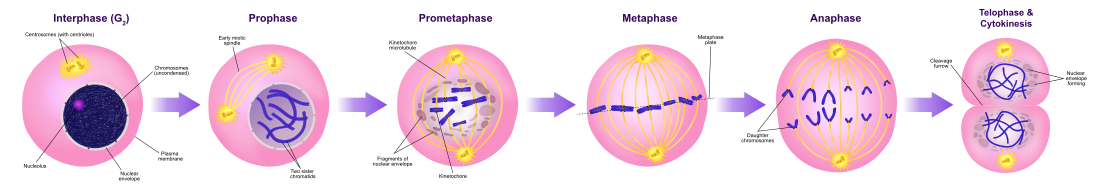

picture for the thumbnailA rough definition of normalization is; Splitting up schemas, tables and columns. That reminds me of mitosis, or the splitting of cells.

Normalization pt. 1: Description

Wikipedia page: Database Normalization

Database normalization is the process of structuring a relational database[clarification needed] in accordance with a series of so-called normal forms in order to reduce data redundancy and improve data integrity. It was first proposed by Edgar F. Codd as part of his relational model.

We have several terms here I want to define in my own words:

Relational Database

Data Modeling is the blueprint and the Relational Database is the actual physical building.

Normal Forms

You can kind of think of Normal Forms as technological tiers for data modeling. First Normal Form (1NF) is like bronze age for database structure or data modeling. 2NF can be seen as a more advanced age. As normal forms increase in number, the strictness of data modeling and object (schema, table, columns) splitting grows.

We won’t go through the actual process of normalization in this post, but definitely in future database normalization posts.

Data Redundancy

Normalization’s goal is to not have any data redundancy. This is where storage costs and normalization have an inverse relationship. The more expensive it is to store data, the less we want to duplicate it. Therefore, we want to normalize our database, splitting up our database objects.

Data Integrity

Data Integrity is one of those ideal goals for anybody working with databases. Data Integrity means accuracy to not only real life but also to other data stored in the same database.

Meaning, we don’t want orphaned foreign keys that don’t point to anything.

We do want all unique identifiers to give us access to everything we might want to know about what the identifier is pointing to i.e. a student ID should tell us a student’s last name, first name, etc.

The second part of the opening description

Normalization entails organizing the columns (attributes) and tables (relations) of a database to ensure that their dependencies are properly enforced by database integrity constraints. It is accomplished by applying some formal rules either by a process of synthesis (creating a new database design) or decomposition (improving an existing database design).

More terms to define because tech loves to have a billion names for `table`.

Attributes = Columns

Relations = Tables

Dependencies

Lots of constraints; never duplicate primary keys or unique identifiers. Every foreign key has a primary key to point to.

Synthesis is creating a new database design. Start from scratch!

Decomposition

The process of increasing normal forms — we’ll cover more of this in future normalization posts

“Improving an existing database design” here is a biased phrase.

Biased because decomposition, or increasing Normal Forms, is only sometimes desired. If we want to improve data integrity and reduce data redundancy, then yes, we decompose a database design and improve it.

Finale

On one end, you could have one table holding all data. On the other end, you could have a highly normalized database with many tables with many tiny scopes (or grains). A good schema will be somewhere in between these two extremes.

We want our data organized in a logical way, yet we don’t want our analysts to have to write too many JOINs in their queries. Too many JOINs means slower queries. Slow queries may mean unhappy customers, higher computational costs, or slow analyses.

On the other hand, too few JOINs means that duplicate data being stored, which can be a strategic choice. Strategic because you pay more in storage costs but less on engineering your data warehouse and query performance. This is called de-normalization and can be done on purpose sometimes.

I hope the basic concepts of normalization have been covered. I will continue covering this Database Normalization Wikipedia page, in its own series, once I write one more post on Data Modeling! Thanks for reading.