Data Engineering Hierarchy of Needs

"Just My Thoughts" - Jay Z

I rounded my first year in a named Data Engineering role in January 2020. I have been writing ETL scripts for 5 years but haven’t been exposed to these needs as deeply as the last 12 months. As I finished these 12 months, I have been thinking about the needs companies have at different levels of Data Infrastructure maturity.

In these last 12 months, I learned different Data Infra needs for different kinds of Companies:

Small

I talked to an Analyst in my local metropolitan area about their small company which had Excel based processes and wanted to move towards an open source language like Python or R.

To bridge between working entirely in spreadsheets and an ERP software implementation, we are currently looking into ETL software to prep & blend data and then something to analyze like R or Python.

— Analyst in my local metro area

Medium

I also learned about the mid-sized company (1500+ staff) that has Airflow infrastructure in place, and a small army of analysts, but a lagging Data Warehouse. They don’t care so much about Spark as much as Automation and future-proofed Data Modeling.

Large

I learned about Netflix and their Data Engineering’s rightful obsession with Spark. With the amount of data they move around, they want their processes, from moving data to instantaneous Machine Learning models, to be fast.

There is a lot of nuance and gray area that I’m leaving out, but these generalizations should paint a picture of differing needs.

Vocabulary:

Spark

a tool that makes data processing faster by splitting up the processing across multiple machines — aka parallel computing.

Parallel Computing Framework that can be used in Python, Java, or Scala

Data Warehouse

A Summary Database that has data organized and ready, or nearly ready, for analysis.

Data Modeling is the process of how Database objects; Schemas, tables, columns, etc. are organized in relation to each other. This means normalization, table relationship cardinality, and ultimately; revenue attribution.

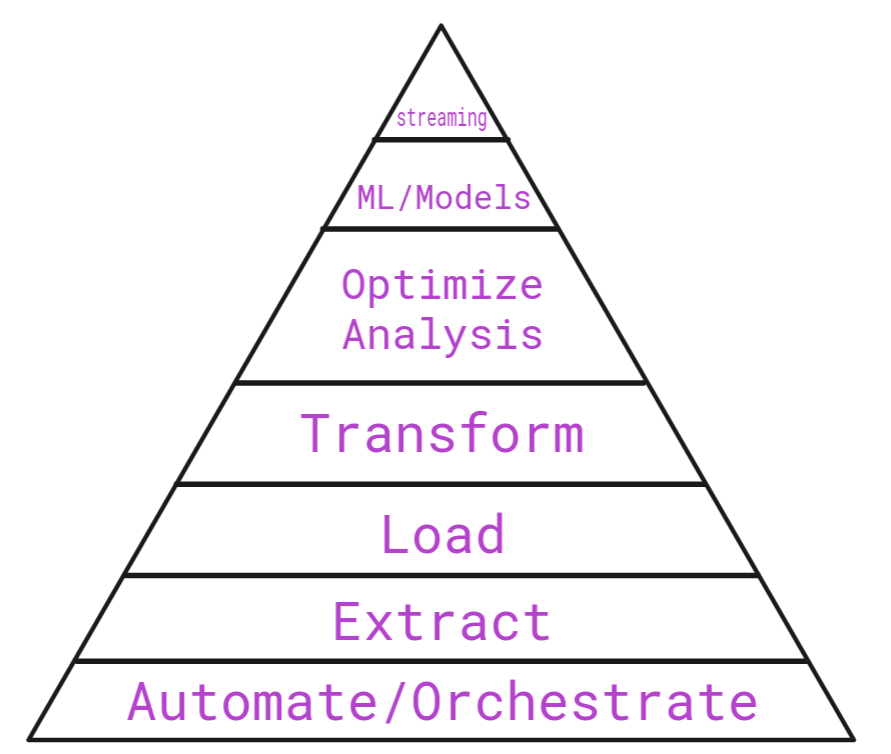

My Twist on Monica Rogati’s Pyramid

Any mental model like this is going to have gaps, or a lack of nuance. Finer details are likely imperfect but I believe the general concepts to be true.

In one sentence, I describe what a Data Engineer does:

Data Engineers automate Data Processes in order to make Analysts more efficient and effective.

Automation/Orchestration is a catch-all for reproducibility-driven development. Meaning, no data is touched without a transparent and automated process.

0. Automation / Orchestration Software

Excel is a global standard and intuitive tool for analysis. However, it can often be the first tool of choice for analysts who want to automate data wrangling. Let’s say we have to combine two different spreadsheets but remove duplicates. This can easily be done in the Data tab of Excel as long as you have the combined datasets highlighted. The problem with doing this through clicks is that this combination and deletion of duplicates now depends on a single person knowing exactly what to do and how to do it. This work is simply not efficient for growing data work needs.

Here is a wonderful example of the anti-thesis of transparency and reproducibility

As a company grows into a small data infrastructure, it should move towards an ETL software that provides them efficiency and transparency. This means that even if you’re not writing 100% code as in Airflow, you still want the following in order to achieve an acceptable level of transparency;

source control

be able to view source ETL/ELT code at any time

logging set up so that debugging broken pipelines is easier

GitLab as an example of End to End Analytics Automation with DataOps:

<Currently Relevant technologies>

ETL:

Airflow

Informatica

Matillion

SSIS - please don't use SSIS until they fix source control.

CI/CD:

Docker

Ansible

Yarn

PyEnv1. Extraction

Extraction is the first strictly data-centered process. A company must be able to systematically pull data from a business’s 1st and 3rd party apps, databases, or clients/vendors, etc.

Without extraction, there are no ingredients with which to cook with.

<Currently Relevant technologies>

Python Requests

AWS S3 or competitor equivalent

SQL

Python

note: Python and SQL will be relevant to nearly all hierarchy steps2. Load

This is the stage of Data Infrastructure which can be called a Data Lake. This scenario is a company’s infrastructure built from the bottom up. They have collected data from all relevant sources, and loaded them up in a database for their analysts to use. However, the data may be in a rough state. Analysts have to write complex queries in order to do that analysis that they want, but they have all relevant data available to them in a single location.

<Currently Relevant technologies>

Snowflake

Redshift

dbt

Airflow3. Transformation

There is some overlap with optimizing analysis, as Data Engineers transform with data in order to make it easier for Analysts to do their work.

However, I did separate the two because I want to distinguish Transformation as work that can be kept in SQL. This is an arbitrary distinction, but a good enough distinction.

An example of transformation; using regex for Social Security Numbers (SSNs). SQL is an immensely versatile tool that can use regex for sensitive data. Obfuscating, or not exposing raw, SSNs with regex is a classic example of fundamental transformation in data processes.

<Currently Relevant technologies>

SQL

dbt4. Optimize Analysis

This is where we start to get into Spark territory. A lot of times, data gets too big for queries to run in a timely manner for urgent business needs.

An Example

Sales Cycles are fast for a company and their sales people need the most up to date metrics on a very large dataset. The metrics get stale after about an hour but it takes 3 hours to calculate. Spark allows you to take your 3 hour job, split it up into parts that multiple computers can work on and then combine your results together at the end.

I must emphasize: Most companies are not at this stage.

Yet, Spark is over-emphasized by recruiters (in my opinion). Small-Mid Sized companies would be served better by providing transparent and robust data workflows that serve fundamental metrics; bar charts, revenue, revenue attribution, etc.

Large companies like Netflix need Spark because the amount of machines that need to work flawlessly between you pressing play on your remote and their streaming to work, is astounding. Most companies are not Netflix.

<Currently Relevant technologies>

Spark SQL

Spark Core

Presto5. Machine Learning (ML) and Models

Automating Machine Learning models is achievable in Airflow. Serving up predictions, in an automated way, to Analysts is an advanced need for companies. This follows Monica Rogati’s AI Hierarchy of Needs. First we have to collect quality data in order for Statistics to be of any business use.

<Currently Relevant technologies>

Spark MLLib

Airflow ML Operators

Automated Visualization - Tableau, Power BI6. Data Streaming

Real-Time, or near Real-Time metrics sounds so cool. Whenever I think of Data Streaming, I think of the portrayal of a tech startup in Anne Hathaway’s “The Intern” (2015). The characters in the movie can see purchases in real time, react to problems that customers have and solve them before the customer decides to take their money elsewhere.

I’ve seen this need really only come up in sophisticated, cloud-native, name-brand, companies that hired Data Nerds like me from day one.

<Currently Relevant technologies>

Spark Streaming

KafkaConclusion

Spark gets a lot of shine past level 4 but in my opinion, is not needed by at least 50% of companies looking to built out their Data Infrastructure or Warehouse. There may be differing opinions about how to actually execute each step. However, the principles of transparency and reproducibility should remain constant because they are the foundation for an efficient and effective data team.