This post is geared towards Data Analysts who already work with data and are looking to pivot to Data Engineering, especially those who are disillusioned by the promises of the “sexiest job” of the 21st century (2012).

Over-saturation in Data Science

I entered Data Science in mid-2015. I watched talks by Hilary Mason, listened to podcasts by Chris Albon, and in general, was pretty on board with learning applied statistics for a predictive analytics job. I pored over Data Science job openings to make sure I was training for the job market and keeping up with overall trends.

The well-known professionals from 2012 to ~2016 were, for the most part, people from all over. Early on, my sense was that Data Scientists were journalists, political scientists, computer scientists, statisticians, and misfits who would give each other a chance as long as the skills were there.

Now that it’s 2019, I can say this: skills aren’t enough. Portfolios aren’t enough. Not unless you’re well connected in a tech hub.

As the supply of junior Data Scientists grew, the implicit requirements became more stringent. This recent (Aug. 1, 2019) post by Hanif Samad found that most people doing the work of data science in Singapore have at least a few years of analytics experience and a Master’s degree. Vicki Boykis wrote another piece detailing over-saturation specifically in the junior Data Science ranks. Too many people are being trained with skills that over-fit the current hype about predictive analytics.

So this is where I come in. To build more hype about the next thing.

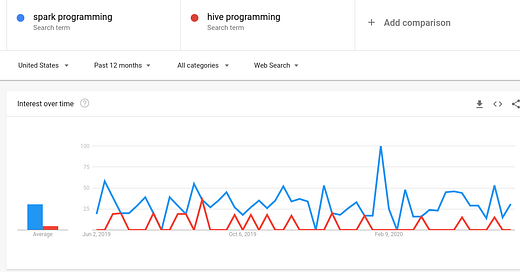

But honestly, by the time Data Engineering reaches max hype, I don’t plan on staying the same worker, and hopefully I can help some people. Also, my guess is that Data Engineering’s max hype won’t be as intense as Data Science’s max hype.

$$\mathrm{maxhype}(\text{DataEngineering}) < \mathrm{maxhype}(\text{DataScience})$$

Now. Let’s get hype.

127,281 results

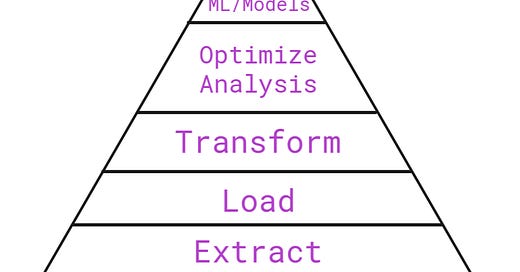

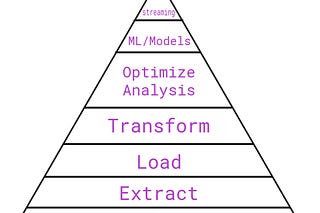

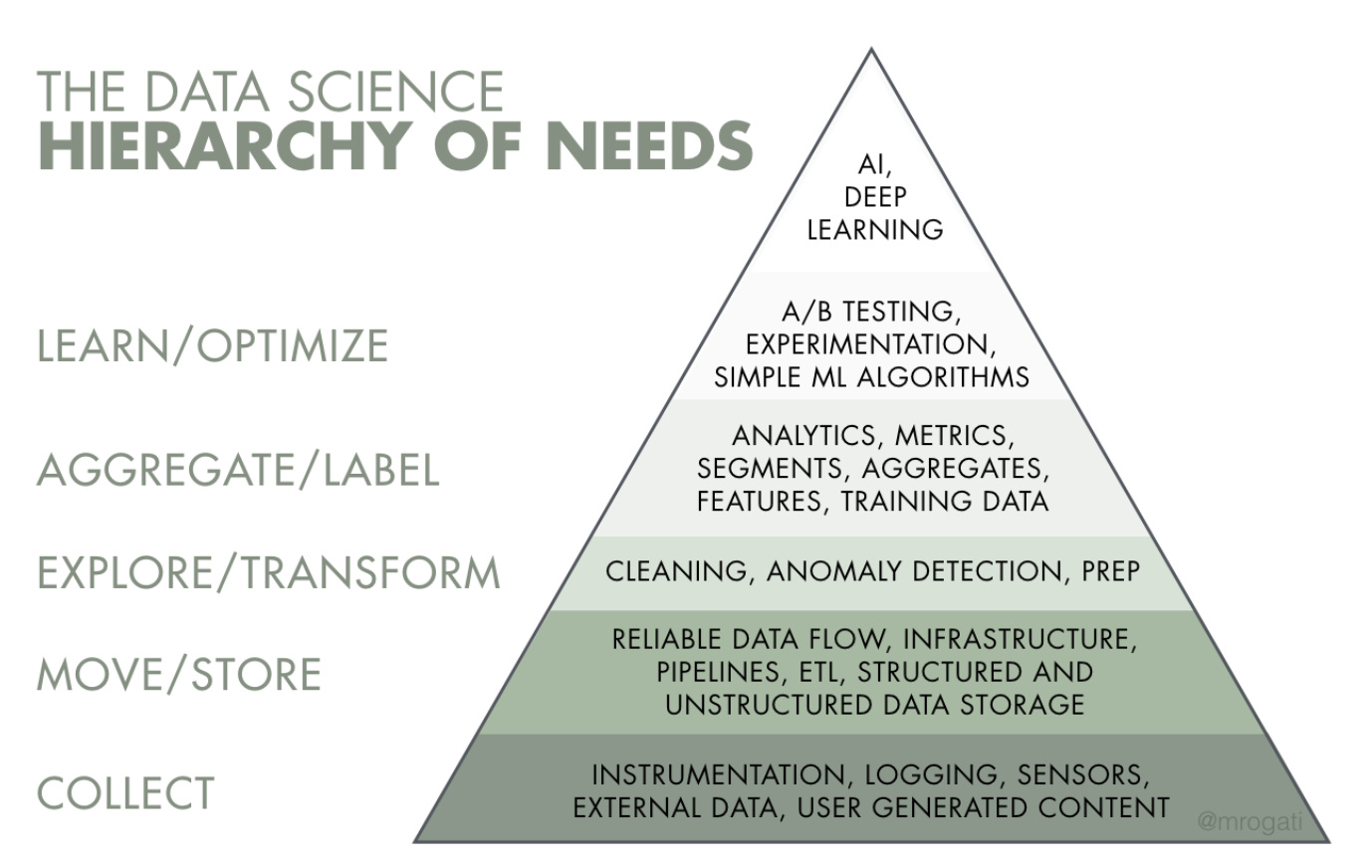

Data Engineering is basically the bottom two or three layers of this triangle.

Source: Monica Rogati’s Medium post “The AI Hierarchy of Needs”

Table of Contents

Technologies are always changing, so I want to spare my readers full-stack anxiety. This won’t be a laundry list of software to become an expert in, but rather a baseline to learn so that you can figure out the specifics of your own career path. These fundamental skills will set you on the path to becoming a producer of data, rather than a consumer.

Most sections have a resource list of learning content at the end.

1. Lingua Francas: Python and SQL

2. Fundamental Skill: Data Modeling

3. Fundamental Skill: ETL

4. Extra Data Engineering Skills and/or Shibboleths

5. Finale: Core Skills

1. Lingua Francas: Python and SQL

In Data Science, scripting and modeling are split (with overlap) between Python and R. Because Python and SQL are so well known to both data workers and software workers (there’s always overlap), they are, in my opinion, the best languages to learn to be hireable on most Data Engineering teams.

A lingua franca (/ˌlɪŋɡwə ˈfræŋkə/; lit. Frankish tongue),[1] also known as a bridge language, common language, trade language, auxiliary language, vehicular language, or link language is a language or dialect systematically used to make communication possible between groups of people who do not share a native language or dialect, particularly when it is a third language that is distinct from both of the speakers' native languages.[2]

In order to sell your craft, you must speak the language of that craft.

The Stitchfix Algo blog post Maintainable ETLs makes the same argument for SQL:

The primary benefit of SQL is that it’s understood by all data professionals: data scientists, data engineers, analytics engineers, data analysts, DB admins, and many business analysts. This is a large user base that can help build, review, debug, and maintain SQL data pipelines.

I highly recommend building out Lingua Franca skills as a foundation. If you see a growing trend outside of Python+SQL for a specialization that interests you, go for it!
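To make the Python + SQL pairing concrete, here is a minimal sketch using Python’s built-in `sqlite3` module as a stand-in for a real database. The transformation lives in plain SQL that any analyst can review, while Python handles the plumbing. The `orders` table, its columns, and the sample rows are hypothetical.

```python
import sqlite3

# The transformation itself is plain SQL, so analysts, engineers,
# and DBAs can all read, review, and debug it.
DAILY_REVENUE_SQL = """
    SELECT order_date, SUM(amount) AS revenue
    FROM orders
    GROUP BY order_date
    ORDER BY order_date
"""


def daily_revenue(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    """Run the shared SQL and return (order_date, revenue) rows."""
    return conn.execute(DAILY_REVENUE_SQL).fetchall()


if __name__ == "__main__":
    # Python does the plumbing: connecting, loading sample data, running SQL.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_date TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [("2019-08-01", 20.0), ("2019-08-01", 5.5), ("2019-08-02", 12.0)],
    )
    for row in daily_revenue(conn):
        print(row)
```

Keeping the SQL in one named string means the same query can be pasted into a SQL client by a teammate who never touches the Python.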

Resource list:

2. Fundamental Skill: Data Modeling

Prediction models? No, a different kind.

In his important blog post, The Rise of the Data Engineer, Maxime Beauchemin writes:

Data modeling techniques: for a data engineer, entity-relationship modeling should be a cognitive reflex, along with a clear understanding of normalization, and have a sharp intuition around denormalization tradeoffs. The data engineer should be familiar with dimensional modeling and the related concepts and lexical field.

I recommend building mental models of the work you’re doing by understanding what’s happening at the database level. That means understanding how tables “talk” to each other through primary and foreign keys. It also means understanding what data types you’re working with and what they mean for performance and business questions. And it means understanding normalization.
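As a small illustration of tables “talking” through keys, the sketch below (again using Python’s built-in `sqlite3`; the `customers`/`orders` schema and data are hypothetical) stores each customer once and has every order point at it with a foreign key. That is normalization in miniature: a customer’s name lives in exactly one row, and a join recovers the combined view when a business question needs it.

```python
import sqlite3

SCHEMA = """
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,  -- one row per customer
    name        TEXT NOT NULL
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers (customer_id),
    amount      REAL NOT NULL         -- numeric type, so SUM() does arithmetic
);
"""


def build_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(10, 1, 30.0), (11, 1, 12.5), (12, 2, 99.0)],
    )
    return conn


def revenue_by_customer(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    # The join follows the foreign key back to the single source of truth for names.
    return conn.execute(
        """
        SELECT c.name, SUM(o.amount) AS revenue
        FROM orders AS o
        JOIN customers AS c ON c.customer_id = o.customer_id
        GROUP BY c.name
        ORDER BY c.name
        """
    ).fetchall()


if __name__ == "__main__":
    conn = build_db()
    print(revenue_by_customer(conn))  # [('Ada', 42.5), ('Grace', 99.0)]
    try:
        conn.execute("INSERT INTO orders VALUES (13, 3, 5.0)")  # customer 3 doesn't exist
    except sqlite3.IntegrityError as err:
        print("rejected:", err)
```

The rejected insert is the point of the foreign key: the database itself refuses an order for a customer that doesn’t exist.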

This is probably the trickiest skill to build because entity-relationship problems aren’t easy to Google. I would call this an intermediate+ data skill, so, as with intermediate skills in any craft, there isn’t much learning content out there. Most of the items in my `Resource list` for this section can be hard to understand, so tread carefully.

Resource list:

Dimensional Modeling book

note: I haven’t read this last book and it’s slightly outdated. Storage is cheaper than ever and denormalization of data warehouses is becoming more common.
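To show the dimensional modeling and denormalization trade-off in the smallest possible form, here is a sketch of a tiny star schema (one fact table, one dimension table; all table names, columns, and rows are hypothetical) in `sqlite3`, flattened into a wide table with `CREATE TABLE ... AS SELECT`. The wide table repeats product attributes on every sale row, which costs storage but lets analysts query without writing joins.

```python
import sqlite3


def build_star_schema(conn: sqlite3.Connection) -> None:
    conn.executescript(
        """
        -- Dimension: descriptive attributes, one row per product.
        CREATE TABLE dim_product (
            product_key  INTEGER PRIMARY KEY,
            product_name TEXT,
            category     TEXT
        );
        -- Fact: one row per sale, holding measures plus keys into dimensions.
        CREATE TABLE fact_sales (
            sale_id     INTEGER PRIMARY KEY,
            product_key INTEGER REFERENCES dim_product (product_key),
            sale_date   TEXT,
            quantity    INTEGER,
            amount      REAL
        );
        INSERT INTO dim_product VALUES (1, 'Widget', 'Hardware'), (2, 'Manual', 'Books');
        INSERT INTO fact_sales VALUES
            (100, 1, '2019-08-01', 3, 30.0),
            (101, 2, '2019-08-01', 1, 15.0),
            (102, 1, '2019-08-02', 2, 20.0);
        """
    )


def build_wide_table(conn: sqlite3.Connection) -> None:
    # Denormalize: copy the dimension's attributes onto every fact row.
    conn.executescript(
        """
        CREATE TABLE sales_wide AS
        SELECT f.sale_id, f.sale_date, p.product_name, p.category, f.quantity, f.amount
        FROM fact_sales AS f
        JOIN dim_product AS p ON p.product_key = f.product_key;
        """
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    build_star_schema(conn)
    build_wide_table(conn)
    # Analysts can now answer "revenue by category" without a join.
    rows = conn.execute(
        "SELECT category, SUM(amount) FROM sales_wide GROUP BY category ORDER BY category"
    ).fetchall()
    print(rows)  # [('Books', 15.0), ('Hardware', 50.0)]
```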

3. Fundamental Skill: ETL

ETL is everywhere. The bad news is that nobody likes writing and debugging ETL. The good news is that opportunities abound, and so do jobs, if you can build ETL skills at your current job.
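ETL boils down to three steps: extract data from a source, transform it, and load it somewhere queryable. Here is a minimal sketch in plain Python, assuming a hypothetical CSV export with `date`, `region`, and `sales` columns and a SQLite target table named `daily_sales` (also hypothetical).

```python
import csv
import io
import sqlite3

# Hypothetical raw export, e.g. a file someone would otherwise download by hand.
RAW_CSV = """date,region,sales
2019-08-01,north,100
2019-08-01,south,
2019-08-02,north,250
"""


def extract(text: str) -> list[dict]:
    """Extract: parse raw CSV text into a list of row dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple[str, str, int]]:
    """Transform: drop rows with missing sales, normalize casing, cast types."""
    return [
        (row["date"], row["region"].upper(), int(row["sales"]))
        for row in rows
        if row["sales"].strip()
    ]


def load(records: list[tuple[str, str, int]], conn: sqlite3.Connection) -> int:
    """Load: write records to the target table and return how many were written."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS daily_sales (date TEXT, region TEXT, sales INTEGER)"
    )
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT INTO daily_sales VALUES (?, ?, ?)", records)
    return len(records)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(load(transform(extract(RAW_CSV)), conn), "rows loaded")
```

Keeping the three steps as separate functions makes each one easy to test on its own, and it is the same shape you will later hand to a scheduler.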

First, start automating workflows with Python

Opportunities for automation:

If you can connect to a database through clicking, you can probably connect to a database through scripting

If you have to open Excel even once

If you have to click your mouse to manipulate data

If you have reports that you currently manually send
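As one example of replacing the “open Excel, pivot, save” routine, here is a minimal sketch that queries a database and writes the summary straight to CSV. SQLite stands in for your work database (for Postgres or another system you would swap in that database’s Python driver), and the `sales` table, query, and file name are hypothetical.

```python
import csv
import sqlite3
from pathlib import Path

# Hypothetical query that replaces a manual Excel pivot of sales by region.
REPORT_SQL = """
    SELECT region, COUNT(*) AS orders, SUM(amount) AS revenue
    FROM sales
    GROUP BY region
    ORDER BY revenue DESC
"""


def write_report(conn: sqlite3.Connection, out_path: Path) -> Path:
    """Run the report query and save the result as a CSV with a header row."""
    cursor = conn.execute(REPORT_SQL)
    header = [col[0] for col in cursor.description]  # column names from the query
    with out_path.open("w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(cursor)  # the cursor yields one tuple per result row
    return out_path


if __name__ == "__main__":
    import tempfile

    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("north", 100.0), ("south", 40.0), ("north", 60.0)],
    )
    out = write_report(conn, Path(tempfile.gettempdir()) / "sales_by_region.csv")
    print(out.read_text())
```

Once a report is a function like this, running it on a schedule (with cron, or later Airflow) is a small step from running it by hand.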

But even if you’re cutting employer costs, ambition doesn’t always have a welcome home. Just as people hide their GRE prep books at bad jobs, you can keep your scripting on your local machine.

Ideally, you don’t have to hide. A peaceful transition to a Data Engineering role in your current company would be awesome. However, if you are in a hostile environment, keep your code on your local machine and don’t push for it to be “in production”. Automate your stuff, brag about the cost-savings on your resume and hold your cards close to your chest if you’re somewhere that wants you to keep your head down.

Then move on to Airflow

Robert Chang wrote a great three-part series of Data Engineering blog posts, and the second one focuses on Airflow as an ETL tool. Airflow is newer than Python and SQL, so its staying power is less certain. However, I feel comfortable recommending it for at least the next few years (2019+).

As far as I know, there aren’t any good Airflow books or tutorials out there. The best way to learn is to take existing Python scripts and move them over to Airflow, since Airflow pipelines are written in Python anyway.
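A rough sketch of that move, assuming Airflow 2.4 or later (import paths and the scheduling argument differ in older and newer releases, so check the documentation for your version). Two plain functions from an existing script become tasks, and `>>` declares their order. The functions, paths, and `dag_id` are hypothetical, and the Airflow import is guarded so the plain functions still run where Airflow isn’t installed.

```python
import csv
import sqlite3
from datetime import datetime
from pathlib import Path

# Hypothetical staging locations; /tmp only works if every task runs on one machine.
RAW_PATH = Path("/tmp/raw_sales.csv")
DB_PATH = Path("/tmp/warehouse.db")


def extract_to_file(raw_path: Path = RAW_PATH) -> None:
    """Extract step: a real script would call an API or query a source system here."""
    rows = [("north", 100), ("south", 40)]  # hypothetical source data
    with raw_path.open("w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["region", "sales"])
        writer.writerows(rows)


def load_from_file(raw_path: Path = RAW_PATH, db_path: Path = DB_PATH) -> int:
    """Transform + load step: read the staged file and write it to the database."""
    with raw_path.open(newline="") as f:
        records = [(r["region"].upper(), int(r["sales"])) for r in csv.DictReader(f)]
    conn = sqlite3.connect(db_path)
    try:
        with conn:  # commits on success
            conn.execute("CREATE TABLE IF NOT EXISTS sales (region TEXT, sales INTEGER)")
            conn.executemany("INSERT INTO sales VALUES (?, ?)", records)
    finally:
        conn.close()
    return len(records)


try:
    # Assumes Airflow 2.4+; other versions use different imports/arguments.
    from airflow import DAG
    from airflow.operators.python import PythonOperator
except ImportError:  # lets the plain functions run and be tested without Airflow
    DAG = None

if DAG is not None:
    with DAG(
        dag_id="sales_etl",  # hypothetical name
        start_date=datetime(2019, 1, 1),
        schedule="@daily",
        catchup=False,  # don't backfill every day since start_date
    ) as dag:
        extract = PythonOperator(task_id="extract", python_callable=extract_to_file)
        load = PythonOperator(task_id="load", python_callable=load_from_file)
        extract >> load  # load runs only after extract succeeds
```

The tasks hand data to each other through a staged file rather than return values, which keeps each task rerunnable on its own. With workers on separate machines, that file would need to live on shared storage instead.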

While you move your scripts to Airflow, read and re-read the Airflow documentation. I must emphasize that slowly reading documentation is critical to learning Open Source Software that’s a bit on the newer side, like Airflow.

Modularizing and scheduling your scripts will unlock a deeper level of ETL skill that is valuable to employers.

Resource list:

Airflow-specific ETL blog post by Robert Chang

Airflow Documentation

4. Extra Data Engineering Skills and/or Shibboleths

When you interview, you are evaluated both verbally and non-verbally, because we are humans and not computers. Non-verbal signals that you belong are something we don’t acknowledge enough, in my opinion.

A shibboleth (/ˈʃɪbəlɛθ, -ɪθ/)[1][2] is any custom or tradition, usually a choice of phrasing or even a single word, that distinguishes one group of people from another.[3][4][5] Shibboleths have been used throughout history in many societies as passwords, simple ways of self-identification, signaling loyalty and affinity, maintaining traditional segregation, or protecting from real or perceived threats.

Notorious Software Engineering shibboleths include algorithm and data structures interview questions that are never used on the job. Regardless of actual importance, these shibboleths correlate with a perception that you belong in the job you’re applying for.

Out of the list of skills, being able to programmatically pull data from and push data to APIs with `requests` is probably the most important. It is how data engineers avoid manual processes, e.g. downloading CSVs by clicking.
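Here is a minimal sketch of pulling paginated JSON from an API with `requests` and saving it as a CSV instead of clicking “Download.” The response shape is an assumption: a hypothetical API that returns `{"results": [...], "next": <url or null>}`. Real APIs paginate and authenticate in different ways, so follow the docs for the one you use. The session is injectable so the function can be exercised without network access.

```python
import csv
from pathlib import Path
from typing import Optional


def fetch_all(url: str, params: Optional[dict] = None, session=None,
              timeout: float = 10.0) -> list[dict]:
    """Follow a hypothetical `next`-link pagination scheme and collect every record."""
    if session is None:
        import requests  # third-party: pip install requests

        session = requests.Session()
    records: list[dict] = []
    while url:
        response = session.get(url, params=params, timeout=timeout)
        response.raise_for_status()  # fail loudly on 4xx/5xx instead of saving an error page
        payload = response.json()
        records.extend(payload["results"])
        url = payload.get("next")  # a missing or null `next` ends the loop
        params = None  # assume the `next` URL already carries the query string
    return records


def save_csv(records: list[dict], out_path: Path) -> None:
    """Write API records to CSV, using the first record's keys as the header."""
    if not records:
        return
    with out_path.open("w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=list(records[0]))
        writer.writeheader()
        writer.writerows(records)


# Example usage (hypothetical endpoint; needs network access and a real API):
#   rows = fetch_all("https://api.example.com/v1/orders", params={"since": "2019-08-01"})
#   save_csv(rows, Path("orders.csv"))
```

With a real API you would typically create a `requests.Session()`, set any auth headers on `session.headers`, and pass it in, so every page request reuses the same connection and credentials.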

Here are some shibboleths, ordered from actually important to easily learned on the job.

Python Requests library / Familiarity with APIs

Bash terminal expertise

Git beyond a handful of memorized commands

Linux/Unix OS, which makes Airflow installation much easier

Ops-Infrastructure software like Ansible

Vim/Emacs for code editing

Resource list:

Intro to APIs by Zapier

Intro to AWS for Newbies (note: I haven’t read this)

Python Requests documentation

5. Finale: Core Skills

This post focuses on the technical skills needed. Technical skills may get you interviews and possibly even a job. If getting a job is what you’re mostly concerned with, I completely empathize. However, core skills like communication, leadership, and curiosity are what will solidify your success in any craft.

As a Data Engineer, my job is to make Data Analysts and Data Scientists as efficient as possible. In my opinion, it is a service-focused craft: helping others succeed is my own success. With that in mind, I hope this post can serve as a guide for those looking to succeed in Data Engineering.